Looks like Didier Sornette has a new pre-print out on the arXiv. I’ve only had a minute or two to scan the paper, but it looks like he and his coauthors have slightly modified their JLS model to fit the repo market and measure the “bubbliness” of leverage. They claim this allows them to make some successful predictions, and they make sure the reader connects this to the recent chatter at the Federal Reserve and in Dodd-Frank on “detecting” bubbles or crises.

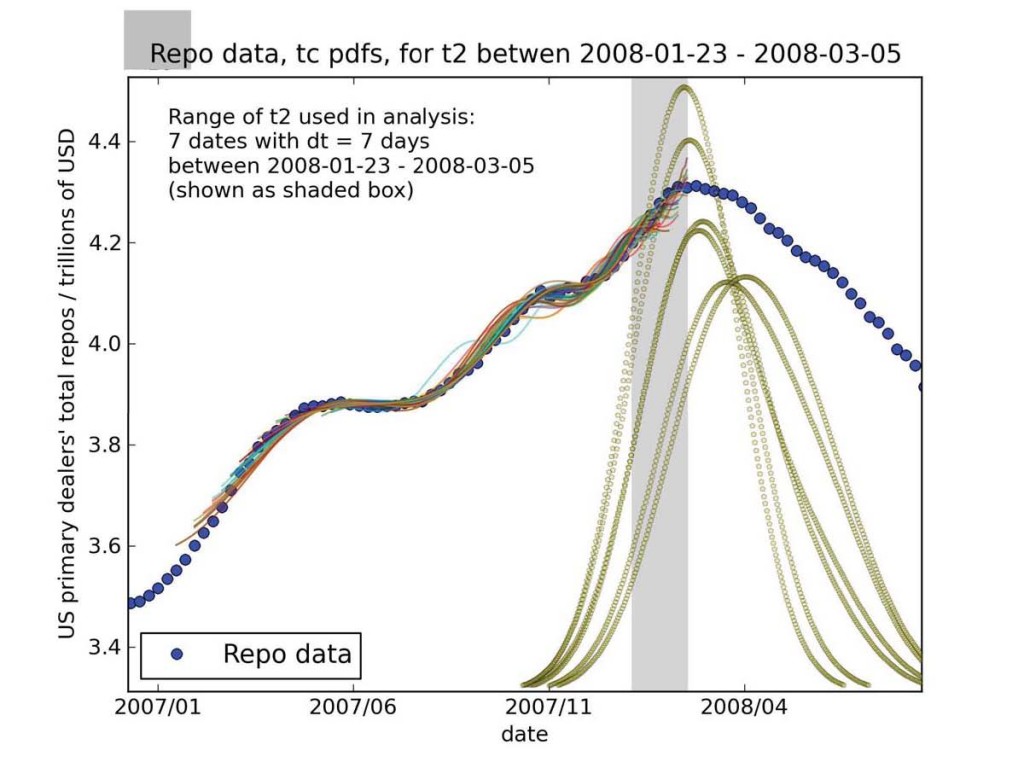

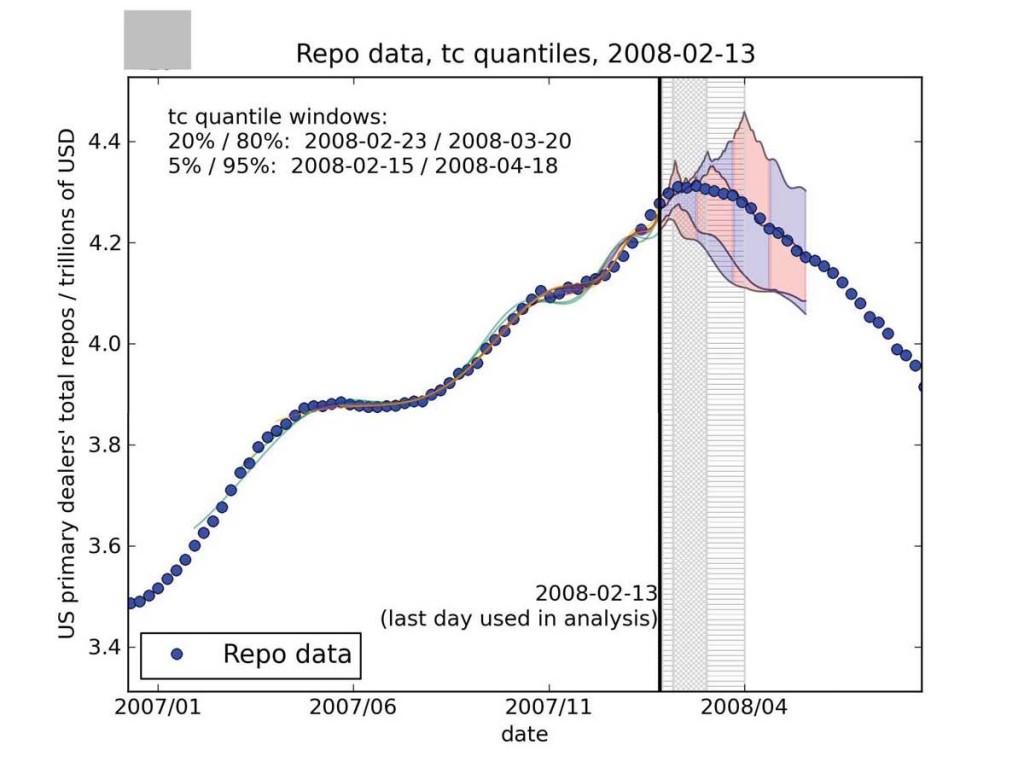

Abstract: Leverage is strongly related to liquidity in a market and lack of liquidity is considered a cause and/or consequence of the recent financial crisis. A repurchase agreement is a financial instrument where a security is sold simultaneously with an agreement to buy it back at a later date. Repurchase agreements (repos) market size is a very important element in calculating the overall leverage in a financial market. Therefore, studying the behavior of repos market size can help to understand a process that can contribute to the birth of a financial crisis. We hypothesize that herding behavior among large investors led to massive over-leveraging through the use of repos, resulting in a bubble (built up over the previous years) and subsequent crash in this market in early 2008. We use the Johansen-Ledoit-Sornette (JLS) model of rational expectation bubbles and behavioral finance to study the dynamics of the repo market that led to the crash. The JLS model qualifies a bubble by the presence of characteristic patterns in the price dynamics, called log-periodic power law (LPPL) behavior. We show that there was significant LPPL behavior in the market before that crash and that the predicted range of times predicted by the model for the end of the bubble is consistent with the observations.

Citation: W. Yan, R. Woodard, D. Sornette. Leverage Bubble. arXiv:1011.0458.

I also noticed that two of the EPS figures didn’t make it through arXiv’s compilation, so I’ve uploaded them here.
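
For anyone who hasn’t run into the LPPL form the abstract mentions, here is a minimal sketch of the functional form as it usually appears in the JLS literature: ln p(t) = A + B(tc − t)^m + C(tc − t)^m cos(ω ln(tc − t) − φ). I haven’t checked how the modified version in this paper differs, so treat this as the textbook form rather than the authors’ code. The function name `lppl` and the parameter values in the demo are my own placeholders, not fitted values from the paper.

```python
import math


def lppl(t, tc, m, omega, A, B, C, phi):
    """Standard LPPL form for the log of the observable at time t.

    tc    : critical time (the model's most probable end of the bubble)
    m     : power-law exponent
    omega : angular log-frequency of the oscillations
    A, B, C, phi : level, power-law amplitude, oscillation amplitude, phase
    """
    dt = tc - t
    if dt <= 0:
        # The power law and the log are only defined before the critical time.
        raise ValueError("LPPL is only defined for t < tc")
    power = dt ** m
    return A + B * power + C * power * math.cos(omega * math.log(dt) - phi)


if __name__ == "__main__":
    # Illustrative parameters only (assumed, not taken from the paper).
    params = dict(tc=10.0, m=0.5, omega=6.0, A=1.0, B=-0.5, C=0.1, phi=0.0)
    for t in (0.0, 5.0, 9.0, 9.9):
        print(f"t = {t:4.1f}  ln p(t) = {lppl(t, **params):.4f}")
```

The oscillations are periodic in ln(tc − t), so they speed up as t approaches tc; that accelerating wiggle on top of a power-law trend is the “characteristic pattern” the model looks for. In practice the parameters are fitted to data, here the repo market size, and the fitted tc gives the predicted end of the bubble.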