I’ve been offline for a few days wrapping up some contracts and academic work, but I wanted to highlight an exciting paper that Dan and I have been working on, Measuring the Complexity of Law: The United States Code. This law review article gives a thorough description of our method for measuring legal complexity and is the companion to A Mathematical Approach to the Study of the United States Code, recently published in Physica A. Given the recent chatter about possible tax reform and simplification, Tax Code complexity may become a popular topic in the near future. The paper isn’t public yet, but you can read the abstract below:

Abstract: The complexity of law is an issue relevant to all who study legal systems. In addressing this issue, scholars have taken approaches ranging from descriptive accounts to theoretical models. Preeminent examples of this literature include Long & Swingen (1987), McCaffery (1990), Schuck (1992), White (1992), Kaplow (1995), Epstein (1997), Kades (1997), Wright (2000), Holz (2007) and Bourcier & Mazzega (2007). Despite the significant contributions made by these and many other authors, a review of the literature demonstrates an overall lack of empirical scholarship.

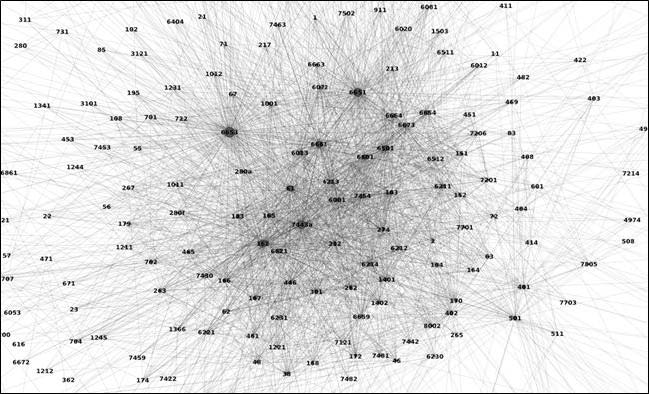

In this paper, we address this empirical gap by focusing on the United States Code (“Code”). Though only a small portion of existing law, the Code is an important and representative document, familiar to both legal scholars and average citizens. In published form, the Code contains hundreds of thousands of provisions and tens of millions of words; it is clearly complex. Measuring this complexity, however, is not a trivial task. To do so, we borrow concepts and tools from a range of disciplines, including computer science, linguistics, physics, and psychology.

Our goals are two-fold. First, we design a conceptual framework capable of measuring the complexity of legal systems. Our conceptual framework is anchored to a model of the Code as the object of a knowledge acquisition protocol. Knowledge acquisition, a field at the intersection of psychology and computer science, studies the protocols individuals use to acquire, store, and analyze information. We develop a protocol for the Code and find that its structure, language, and interdependence primarily determine its complexity.

Second, having developed this conceptual framework, we empirically measure the structure, language, and interdependence of the Code’s forty-nine active Titles. We combine these measurements to calculate a composite measure that scores the relative complexity of these Titles. This composite measure simultaneously takes into account contributions made by the structure, language, and interdependence of each Title through the use of weighted ranks. Weighted ranks are commonly used to pool or score objects with multidimensional or nonlinear attributes. Furthermore, our weighted rank framework is flexible, intuitive, and entirely transparent, allowing other researchers to quickly replicate or extend our work. Using this framework, we provide simple examples of empirically supported claims about the relative complexity of Titles.
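The post doesn't spell out the paper's exact weighting scheme, so the following is only a minimal sketch of a generic weighted-rank composite. It assumes each dimension (structure, language, interdependence) has already been reduced to a single number per Title, with larger meaning more complex. The Title labels, values, and equal weights are hypothetical placeholders, not measurements or results from the paper.

```python
from typing import Dict, List

# Hypothetical toy measurements (illustrative only, NOT real Code data).
# Assumption: a larger value means "more complex" on every dimension.
MEASUREMENTS: Dict[str, Dict[str, float]] = {
    "Title A": {"structure": 120.0, "language": 4.1, "interdependence": 35.0},
    "Title B": {"structure": 80.0, "language": 4.6, "interdependence": 50.0},
    "Title C": {"structure": 200.0, "language": 3.9, "interdependence": 10.0},
}

# Hypothetical weights; equal weighting is just one possible choice.
WEIGHTS: Dict[str, float] = {"structure": 1.0, "language": 1.0, "interdependence": 1.0}


def rank_on(dimension: str, data: Dict[str, Dict[str, float]]) -> Dict[str, int]:
    """Rank Titles on one dimension: 1 = most complex (largest value).

    Python's sort is stable, so tied values keep their insertion order.
    """
    ordered = sorted(data, key=lambda t: data[t][dimension], reverse=True)
    return {title: i + 1 for i, title in enumerate(ordered)}


def composite_scores(data: Dict[str, Dict[str, float]],
                     weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted sum of per-dimension ranks (lower score = more complex)."""
    per_dim = {d: rank_on(d, data) for d in weights}
    return {t: sum(w * per_dim[d][t] for d, w in weights.items()) for t in data}


def composite_ranking(data: Dict[str, Dict[str, float]],
                      weights: Dict[str, float]) -> List[str]:
    """Order Titles from most to least complex by composite score."""
    scores = composite_scores(data, weights)
    return sorted(scores, key=lambda t: (scores[t], t))


if __name__ == "__main__":
    print(composite_ranking(MEASUREMENTS, WEIGHTS))
    # → ['Title B', 'Title A', 'Title C']
```

Because ranks rather than raw values are summed, dimensions measured on very different scales contribute comparably, and anyone can test a different emphasis simply by editing `WEIGHTS`.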

In sum, this paper posits the first conceptually rigorous and empirical framework for addressing the complexity of law. We identify structure, language, and interdependence as the conceptual contributors to complexity. We then measure these contributions across all forty-nine active Titles in the Code and obtain relative complexity rankings. Our analysis suggests several additional domains of application, such as contracts, treaties, administrative regulations, municipal codes, and state law.

D. M. Katz, M. J. Bommarito II. Measuring the Complexity of Law: The United States Code.